With the foundations of usability and search engine visibility in mind, it is time to turn to the design of the Web site, which is what makes it all presentable.

Looks may not matter to search engines, but they go a long way toward assuring visitors of your credibility and turning them into customers.

Every Web site needs to be designed with clear goals (or conversions) in mind. Conversions take many forms and may include the following:

Before designing a Web site, research your audience and competitors to determine their expectations and the elements common to your industry. Mock up every layer of interaction, so that before any coding begins there is a clear map of how the Web site should work. It’s all about foundations.

Here are some of the cues that visitors use to determine the credibility of a Web site:

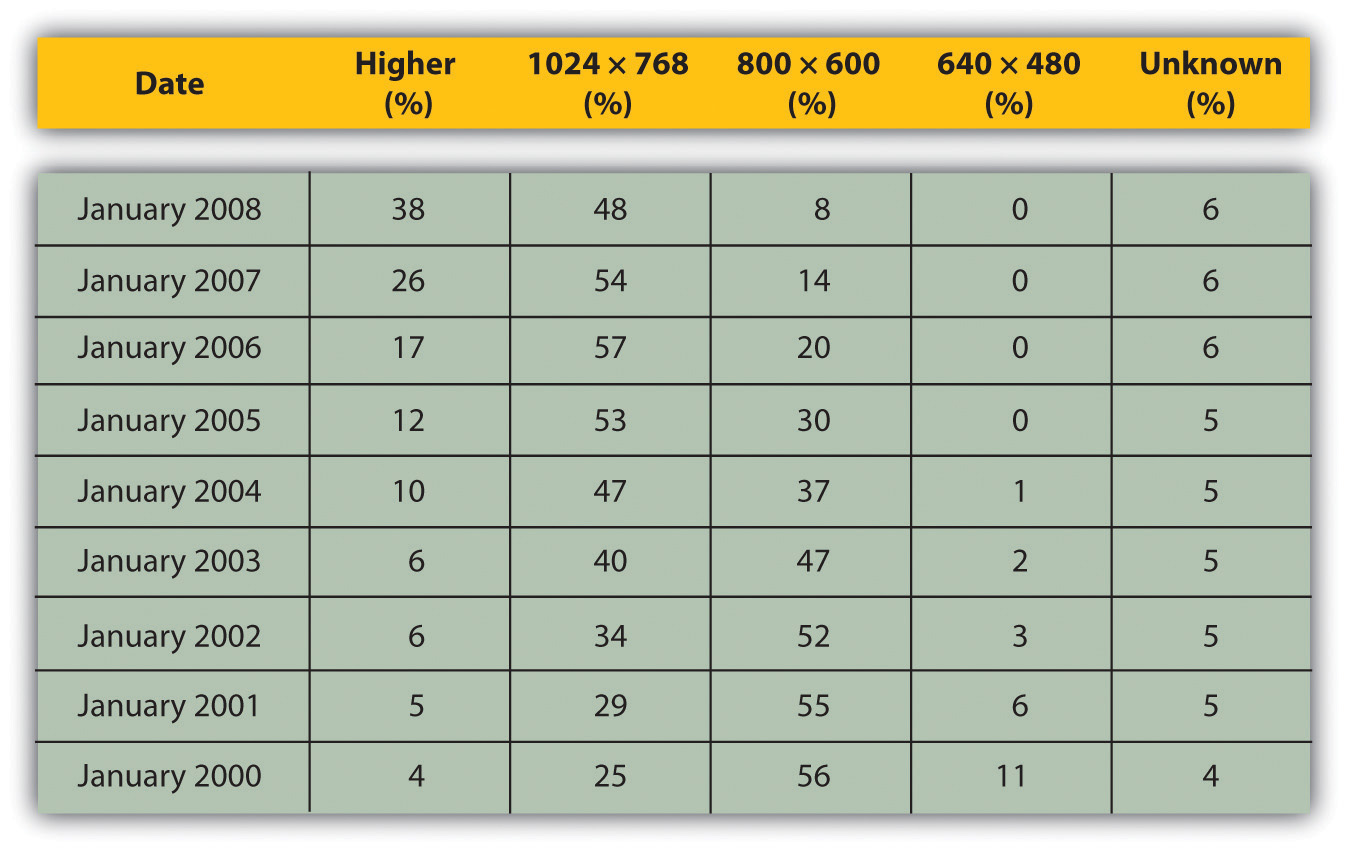

Design also affects the accessibility of a Web site. Take screen resolution into account: designing for the biggest screen available could leave many of your users scrolling across and down to see the whole Web page. Subtle shading, colored backgrounds behind text, and fancy fonts can also mean that many users cannot even see your Web site properly.

Figure 13.6

Figures show that screen resolution just keeps getting higher.

Source: Based on information from http://www.w3schools.com.

Screens just keep getting bigger, so does that mean that Web sites should as well? What about users who never hit the “maximize” button on their browser? How effective do you think sales data for laptops are in determining optimal screen resolution?

A cascading style sheet (CSS) is defined by the W3C (World Wide Web Consortium) as “a simple mechanism for adding style (e.g., fonts, colors, spacing) to Web documents.” (Source: “Cascading Style Sheets Home Page,” World Wide Web Consortium, June 3, 2010, http://www.w3c.org/Style/css, accessed June 23, 2010.)

In the early days of the Web, designers tended to use tables to lay out the content of a Web page, and many Web sites still do so today. However, different browsers, and even different versions of the same browser, support code differently. The result is either Web sites that work only in certain browsers or bulky code written to cope with all the different versions.

The W3C (http://www.w3.org) was created in 1994 and since then has been responsible for specifications and guidelines to promote the evolution of the Web, while ensuring that Web technologies work well together. The Web Standards Project (http://www.webstandards.org) launched in 1998 and labeled key guidelines as “Web standards.” Modern browsers should be built to support these standards, which should vastly reduce cross-browser compatibility problems, such as Web sites displaying differently in different browsers.

Web standards include the following:

CSS is the standard layout language. It controls colors, typography, and the size and placement of elements on a Web page. Previously, Web developers had to create these instructions for every page in a Web site. With CSS, a single file can control the appearance of an entire site.

CSS allows designers and developers to separate presentation from content. This has several key benefits:

CSS can also do the following:

To see CSS in action, visit http://www.csszengarden.com, where you can make a single HTML page look very different, depending on which one of the many designer-contributed style sheets you apply to it.

As the name implies, a content management system (CMS) is used to manage the content of a Web site. A CMS is used when a site is updated frequently and when people other than Web developers need to update its content. Today, many sites are built on a CMS, which also allows a Web site’s content to be updated from any location in the world.

A CMS can be built specifically for a Web site, and many Web development companies build their own CMS for their clients to use. A CMS can also be bought prebuilt, and many open-source prebuilt CMSs are available, some of which are free. (Unlike proprietary software, open-source software makes its source code available so that other developers can build applications for it or even improve it.)

A CMS should be selected with the goals and functions of the Web site in mind. A CMS needs to be able to scale along with the Web site and business that it supports, and not the other way around.

Of course, the CMS selected should result in a Web site that is search engine friendly.

Here are some key features to look out for when selecting or building a CMS:

URLs. Instead of using dynamic parameters, the CMS should allow for server-side rewriting of URLs (uniform resource locators). It should allow for the creation of URLs that have the following characteristics:

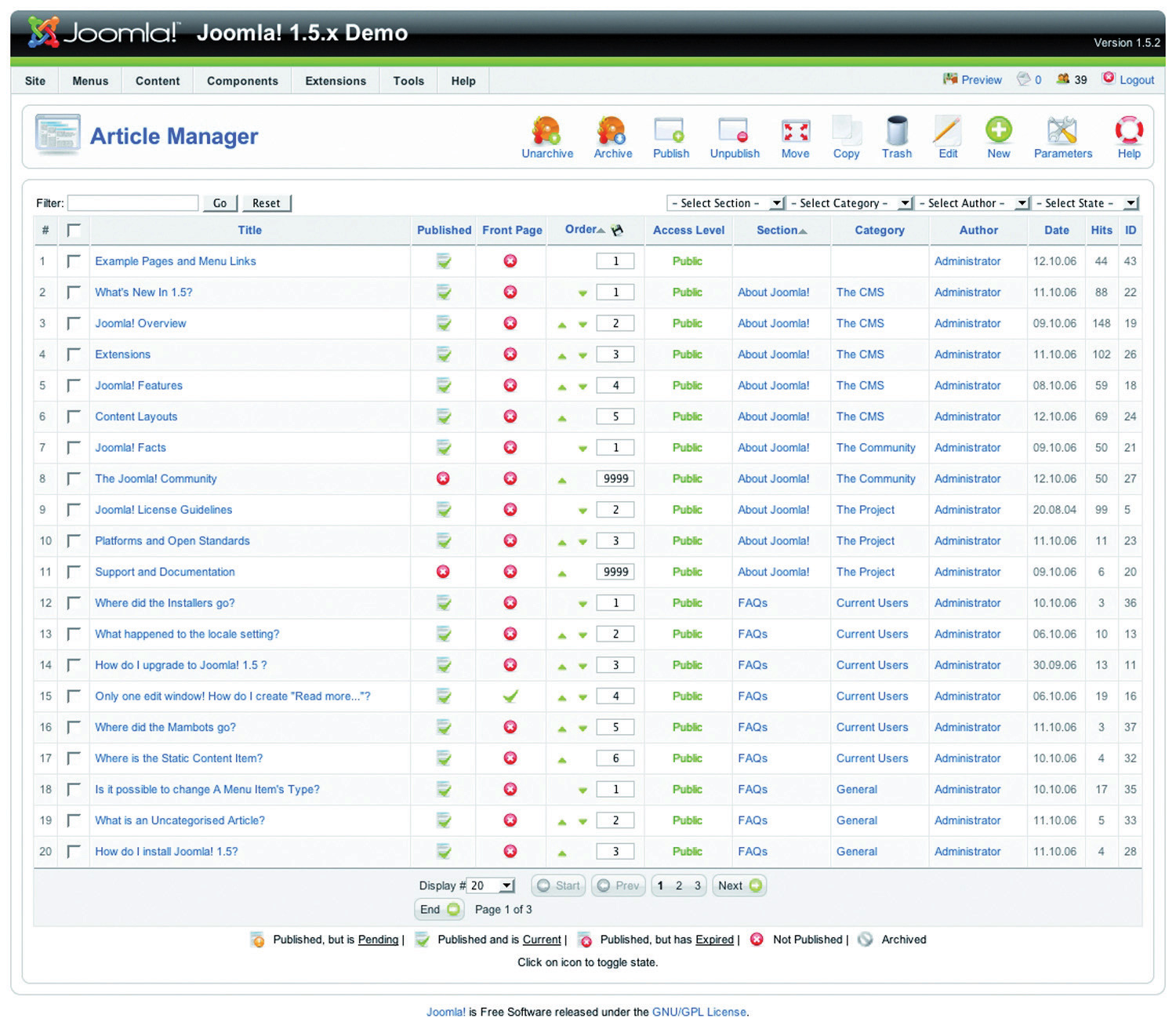

Figure 13.7

Joomla! is an open-source content management system (CMS). Here you can see how the CMS allows you to manage the articles on the Web site.

Take care when building clean, descriptive, and dynamic URLs from CMS content. Suppose you use a news heading (“Storm” in this example) as part of a URL (http://www.websitename.com/cape/storm), and someone later changes the heading to “Tornado” (http://www.websitename.com/cape/tornado). The URL changes with it, and search engines will index the new URL as a new page with the same content as the URL that had the old heading. Bear this in mind before adding dynamic parameters to your URLs.
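A minimal sketch of the idea, assuming a CMS that builds URLs from a section name and an article heading. The function names (`slugify`, `clean_url`, `rewrite`) and the internal parameters `section` and `article` are hypothetical; real CMSs usually do this in their own routing code or in Web server rewrite rules. The last lines show the pitfall described above: editing the heading produces a different URL for the same article.

```python
import re
import unicodedata


def slugify(heading: str) -> str:
    """Turn a heading such as 'Storm' into a lowercase, hyphenated URL segment."""
    # Decompose accented characters and drop anything that is not plain ASCII.
    text = unicodedata.normalize("NFKD", heading).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    text = re.sub(r"[^a-zA-Z0-9]+", "-", text).strip("-")
    return text.lower()


def clean_url(section: str, heading: str) -> str:
    """Build a descriptive URL from a section name and an article heading."""
    return f"/{slugify(section)}/{slugify(heading)}"


def rewrite(path: str) -> dict[str, str]:
    """Server-side rewrite: map a clean path back to the CMS's internal parameters."""
    section, article = path.strip("/").split("/", 1)
    return {"section": section, "article": article}


old_url = clean_url("Cape", "Storm")
new_url = clean_url("Cape", "Tornado")
print(old_url)           # /cape/storm
print(new_url)           # /cape/tornado (a different URL for the same article)
print(rewrite(old_url))  # {'section': 'cape', 'article': 'storm'}
```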

Finally, using a CMS that supports standards-compliant HTML and CSS is very important; without it, pages may render inconsistently across browsers. Standards compliance also means faster loading times and reduced bandwidth, makes markup easier to maintain, supports SEO efforts, and ensures that every visitor to a Web site, whatever browser they use, will be able to see everything on the site.

As a whole, technology should act only as an enabler. It should never be a site’s main focus. Here are some technical considerations vital for a good Web site.

Whether you use proprietary or open-source software is an important consideration when building a new site, and all avenues should be explored. Open-source software is fully customizable and benefits from a large developer community. Proprietary software usually includes support in its price.

It is vital that important URLs in your site are indexable by the search engines. Ensure that URL rewriting is enabled according to the guidelines in this chapter. URL rewriting should be able to handle extra dynamic parameters that might be added by search engines for tracking purposes.
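One way to handle extra parameters is to route on the clean path alone and ignore any query parameter the CMS does not recognize. The sketch below assumes a hypothetical CMS whose only meaningful query parameter is `page`; `utm_source` stands in for an added tracking parameter. Both requests resolve to the same article.

```python
from urllib.parse import parse_qs, urlsplit

# Query parameters this hypothetical CMS actually uses; anything else is ignored.
KNOWN_PARAMS = {"page"}


def route(url: str) -> dict[str, str]:
    """Resolve a clean URL to internal CMS parameters, tolerating extra query strings."""
    parts = urlsplit(url)
    section, article = parts.path.strip("/").split("/", 1)
    params = {"section": section, "article": article}
    # Keep only recognized parameters; tracking parameters are silently dropped.
    for key, values in parse_qs(parts.query).items():
        if key in KNOWN_PARAMS:
            params[key] = values[0]
    return params


print(route("http://www.websitename.com/cape/storm"))
# {'section': 'cape', 'article': 'storm'}
print(route("http://www.websitename.com/cape/storm?utm_source=newsletter&page=2"))
# {'section': 'cape', 'article': 'storm', 'page': '2'}
```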

Compression helps to speed up download times of a Web page, improving user experience.
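In practice, Web servers typically compress responses with gzip when the browser's `Accept-Encoding` request header says it supports gzip, and mark the response with a `Content-Encoding: gzip` header. The sketch below imitates that decision with Python's standard `gzip` module; `encode_response` is a hypothetical helper, and the repetitive markup is illustrative only. Exact byte counts depend on the content, so none are claimed here.

```python
import gzip

# Illustrative, repetitive markup standing in for a real page.
html = ("<div class='product'><h2>Product name</h2>"
        "<p>Description text</p></div>\n" * 200).encode("utf-8")


def encode_response(body: bytes, accept_encoding: str) -> tuple[bytes, dict[str, str]]:
    """Gzip the response body only if the browser says it accepts gzip."""
    headers: dict[str, str] = {}
    # Simplification: a substring check ignores quality values such as "gzip;q=0".
    if "gzip" in accept_encoding.lower():
        body = gzip.compress(body)
        headers["Content-Encoding"] = "gzip"
    return body, headers


compressed, headers = encode_response(html, "gzip, deflate")
print(len(html), "bytes before,", len(compressed), "bytes after", headers)

# The browser reverses the process and gets back exactly the same page.
assert gzip.decompress(compressed) == html
```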

Form validation is the process whereby the data entered into a form are verified in order to meet certain preset conditions (e.g., ensuring that the name and e-mail address fields are filled in).

Client-side validation (validation that takes place before information is sent to the server) relies on JavaScript, which is not necessarily available to all visitors. Client-side validation can alert a visitor to an incorrectly filled-in form most quickly, but server-side validation is more accurate. It is also important to have a tool that collects all the failed tests and presents appropriate error messages neatly above the form the user is trying to complete. This ensures that correctly entered data are not lost but repopulated in the form, saving time and reducing frustration.
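A minimal server-side sketch of that approach, assuming a contact form with `name` and `email` fields. The function, the error messages, and the deliberately simple e-mail pattern are all hypothetical. Every check runs, all failures are collected for display above the form, and the submitted values are kept so the form can be repopulated.

```python
import re
from dataclasses import dataclass, field

# A deliberately simple e-mail pattern; real-world address validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class ValidationResult:
    errors: list[str] = field(default_factory=list)       # shown above the form
    values: dict[str, str] = field(default_factory=dict)  # used to repopulate the form

    @property
    def ok(self) -> bool:
        return not self.errors


def validate_contact_form(form: dict[str, str]) -> ValidationResult:
    """Run every check, collect all failures, and keep what the visitor typed."""
    name = form.get("name", "").strip()
    email = form.get("email", "").strip()
    result = ValidationResult(values={"name": name, "email": email})

    if not name:
        result.errors.append("Please enter your name.")
    if not email:
        result.errors.append("Please enter your e-mail address.")
    elif not EMAIL_PATTERN.match(email):
        result.errors.append("Please enter a valid e-mail address.")
    return result


result = validate_contact_form({"name": "Thandi", "email": "thandi@example"})
print(result.ok)      # False
print(result.errors)  # ['Please enter a valid e-mail address.']
print(result.values)  # {'name': 'Thandi', 'email': 'thandi@example'}
```

A client-side script could run the same checks for speed, but the server-side checks remain the ones to trust, since JavaScript may be unavailable.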

The Internet has made it possible to conduct business globally, but this means that Web sites need to make provision for non-English visitors. It is advisable to support international characters with UTF-8 (8-bit Unicode Transformation Format) encoding, both on the Web site itself and in the form data submitted to it.
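The short sketch below, using only Python's standard library, shows form data containing non-English characters being encoded as UTF-8 on the way in and decoded as UTF-8 on the server. The field names and values are illustrative. On the page itself, UTF-8 is usually declared with a `<meta charset="utf-8">` tag or a `charset=utf-8` parameter on the `Content-Type` header.

```python
from urllib.parse import parse_qs, urlencode

# A visitor submits a form containing non-English characters.
submitted = urlencode({"name": "José Müller", "city": "東京"}, encoding="utf-8")
print(submitted)  # percent-encoded UTF-8 bytes

# On the server, decode the submitted form data as UTF-8 as well.
fields = parse_qs(submitted, encoding="utf-8")
print(fields["name"][0], fields["city"][0])  # José Müller 東京

# One character can take several bytes in UTF-8.
print(len("東京"), "characters,", len("東京".encode("utf-8")), "bytes")  # 2 characters, 6 bytes
```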

Sessions can be used to recognize individual visitors on a Web site, which is useful for click-path analysis. Cookies can be used to maintain sessions, but URL rewriting can be used to compensate for users who do not have cookies activated. This means that as visitors move through a Web site, their session information is stored in a dynamically generated Web address.

Why does URL rewriting create a moving target for a search engine spider?

Search engine spiders do not support cookies, so many Web sites will attempt URL rewriting to maintain the session as the spider crawls the site. However, search engine spiders handle these URLs poorly (they appear to create a moving target for the robot), and the URLs can hinder crawling and indexing. The workaround: use technology to detect whether a visitor to the site is a person or a robot, and do not rewrite URLs for search engine robots.
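A minimal sketch of that workaround, assuming the visitor is identified by the `User-Agent` request header. The list of crawler fragments, the `sid` query parameter, and the function names are hypothetical. Robots always receive the clean URL; only human visitors without cookies get the session ID in the URL.

```python
import secrets

# Hypothetical, deliberately short list of crawler User-Agent fragments.
# A real site needs a maintained list; User-Agent strings can also be faked.
BOT_SIGNATURES = ("googlebot", "bingbot", "slurp", "baiduspider")


def is_search_robot(user_agent: str) -> bool:
    """Guess from the User-Agent header whether the visitor is a search engine spider."""
    ua = user_agent.lower()
    return any(signature in ua for signature in BOT_SIGNATURES)


def link_for(path: str, user_agent: str, session_id: str, cookies_enabled: bool) -> str:
    """Return the URL to use in links on a page served to this visitor."""
    if is_search_robot(user_agent):
        # Spiders always get the same clean URL, so each page has one stable address.
        return path
    if cookies_enabled:
        # The session ID travels in a cookie, so the URL stays clean.
        return path
    # Cookieless human visitor: carry the session ID in the URL instead.
    return f"{path}?sid={session_id}"


session_id = secrets.token_hex(8)  # created once per visit and reused on every page
googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(link_for("/cape/storm", googlebot, session_id, cookies_enabled=False))  # /cape/storm
print(link_for("/cape/storm", "Mozilla/5.0 (Windows NT 10.0)", session_id, cookies_enabled=False))
```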

Site maps are exceptionally important, both to visitors and to search engines. Technology can be implemented that automatically generates and updates both the human-readable and XML site maps, ensuring spiders can find new content.
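The sketch below generates an XML site map in the format defined by the Sitemaps protocol (a `urlset` element in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace, with one `url` entry per page). It assumes the CMS can supply each page's URL and last-modified date; `build_sitemap` and the sample pages are hypothetical. Calling it whenever content is published keeps the site map current.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return an XML site map for (URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2010-06-23
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# In a CMS, this list would come from the database each time content is published.
pages = [
    ("http://www.websitename.com/", "2010-06-23"),
    ("http://www.websitename.com/cape/storm", "2010-06-20"),
]
print(build_sitemap(pages))
```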

Really simple syndication (RSS) is an absolute necessity. With millions of Web sites and blogs in existence, Web users can no longer afford to spend time browsing their favorite sites to see if new content has been added. By enabling RSS feeds on certain sections of the site, especially those that are frequently updated, you can have the content delivered directly to users. Visitors should be able to pick and choose the sections they want to receive updates from via a feed.
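A minimal sketch of a per-section feed in RSS 2.0 format, where a `channel` requires `title`, `link`, and `description` elements and each story is an `item`. The helper `build_rss`, the "Cape news" section, and the sample story are hypothetical; a CMS would call something similar for each section so visitors can subscribe only to the ones they want.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss(title: str, link: str, description: str, items: list[dict]) -> str:
    """Return an RSS 2.0 feed for one section of the site."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item in items:
        node = ET.SubElement(channel, "item")
        ET.SubElement(node, "title").text = item["title"]
        ET.SubElement(node, "link").text = item["link"]
        # RSS 2.0 expects RFC 822-style dates, which format_datetime produces.
        ET.SubElement(node, "pubDate").text = format_datetime(item["published"])
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(rss, encoding="unicode")


# One feed per section lets visitors subscribe only to the sections they want.
cape_feed = build_rss(
    "Cape news",
    "http://www.websitename.com/cape",
    "Latest stories from the Cape section",
    [{
        "title": "Storm",
        "link": "http://www.websitename.com/cape/storm",
        "published": datetime(2010, 6, 20, 8, 0, tzinfo=timezone.utc),
    }],
)
print(cape_feed)
```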

A cascading style sheet (CSS) is what gives a site its look and feel. With CSS, the following are true: